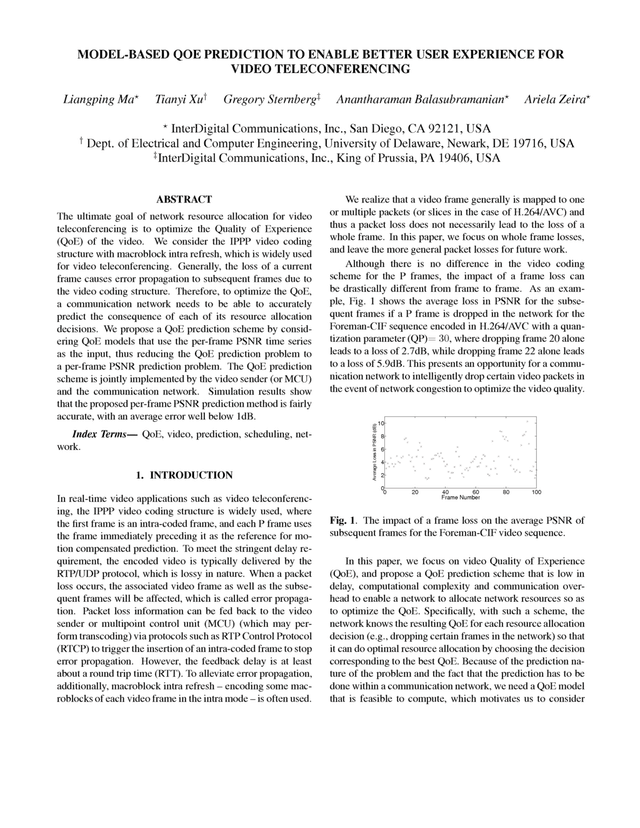

The ultimate goal of network resource allocation for video teleconferencing is to optimize the Quality of Experience (QoE) of the video. This paper proposes a QoE prediction scheme built on QoE models that take the per-frame PSNR (peak signal-to-noise ratio) time series as input, which reduces the QoE prediction problem to a per-frame PSNR prediction problem.
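The reduction described above rests on two pieces: computing a per-frame PSNR time series, and feeding that series to a QoE model. The sketch below illustrates both under stated assumptions. Frames are treated as flat lists of 8-bit luma samples, PSNR uses the standard definition 10·log10(MAX²/MSE), and `mean_capped_qoe` (with its `cap_db` parameter) is a hypothetical placeholder, not the QoE model used in the paper. All function names here are illustrative.

```python
import math
from typing import Callable, List, Sequence

# Assumption: a frame is a flat sequence of 8-bit luma samples.
Frame = Sequence[int]


def frame_psnr(ref: Frame, dec: Frame, max_value: int = 255) -> float:
    """PSNR in dB of a decoded frame against its reference frame."""
    if len(ref) != len(dec) or len(ref) == 0:
        raise ValueError("frames must be non-empty and of equal size")
    mse = sum((r - d) ** 2 for r, d in zip(ref, dec)) / len(ref)
    if mse == 0:
        return math.inf  # lossless frame: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


def psnr_series(refs: Sequence[Frame], decs: Sequence[Frame]) -> List[float]:
    """Per-frame PSNR time series: the input the QoE models consume."""
    if len(refs) != len(decs):
        raise ValueError("reference and decoded videos differ in length")
    return [frame_psnr(r, d) for r, d in zip(refs, decs)]


def mean_capped_qoe(series: Sequence[float], cap_db: float = 60.0) -> float:
    """Hypothetical stand-in QoE model: mean PSNR with each value capped at cap_db.

    This is NOT the paper's QoE model; it only marks where one plugs in.
    """
    if not series:
        raise ValueError("empty PSNR series")
    capped = [min(p, cap_db) for p in series]
    return sum(capped) / len(capped)


def predict_qoe(
    predicted_series: Sequence[float],
    qoe_model: Callable[[Sequence[float]], float] = mean_capped_qoe,
) -> float:
    """Given a predicted per-frame PSNR series, QoE prediction is one model call."""
    return qoe_model(predicted_series)


if __name__ == "__main__":
    refs = [[0, 0, 0, 0], [10, 10, 10, 10]]
    decs = [[0, 0, 0, 2], [10, 10, 10, 10]]
    series = psnr_series(refs, decs)  # first entry ≈ 48.13 dB, second is inf
    print(series, predict_qoe(series))
```

The design choice mirrors the abstract: once a predictor produces the per-frame PSNR series for an allocation decision, any QoE model with the signature `Sequence[float] -> float` can be swapped in through `qoe_model` without changing the rest of the pipeline.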

Model-Based QoE Prediction to Enable Better User Experience for Video Teleconferencing

Research Paper / Feb 2014