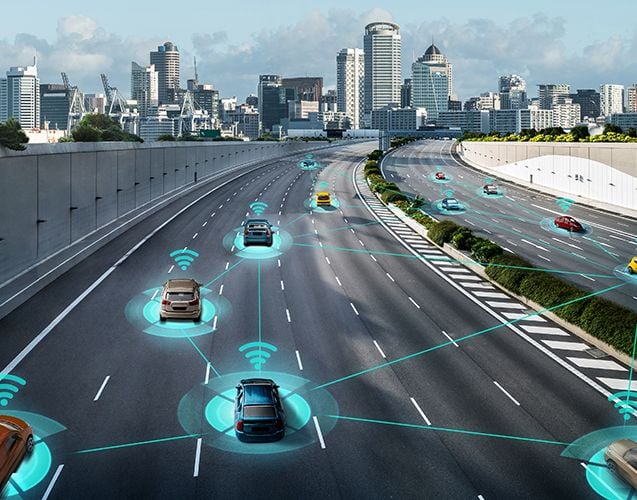

The ubiquitous deployment of 4G/5G technology has made cellular networks critical societal infrastructure, one that will facilitate the delivery and adoption of emerging applications and use cases such as extended reality, automation, and robotics. These new applications require high throughput and low latency in both uplink and downlink for optimal performance, while coexisting with traditional downlink-heavy consumer applications. Successfully supporting these new use cases hinges on the network being able to allocate resources as efficiently as possible. In this paper, we use a 3GPP-compliant 5G testbed to analyze the limitations of legacy resource allocation methods, which rely on instantaneous channel measurements, and examine their effect on throughput, a key performance indicator. We then propose a framework in which resource allocation decisions leverage predictions of network quality computed at the connected devices, and study two prediction methods that offer different degrees of reliability. We further validate our framework with real-world cellular data and demonstrate that, with accurate forecasts of channel metrics, mean network throughput in the considered scenario can improve by a factor of ∼1.8 over a reactive baseline that uses the best channel quality indicator (best-CQI) scheduling policy.
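To make the reactive-versus-proactive distinction concrete, here is a toy sketch of a best-CQI scheduler that gives each slot to the UE with the highest believed CQI. It is not the paper's framework or testbed. It assumes a hypothetical linear CQI-to-spectral-efficiency mapping (not the 3GPP CQI table), a one-slot reporting delay for the reactive case, a perfect forecast for the proactive case, and a simplified link model in which a transmission fails if the CQI used for link adaptation exceeds what the channel actually supports. The names `spectral_efficiency`, `best_cqi_pick`, and `simulate`, and the CQI trace, are made up for illustration and say nothing about the ∼1.8 result reported above.

```python
from typing import List, Sequence


def spectral_efficiency(cqi: int) -> float:
    """Hypothetical linear CQI -> bit/s/Hz mapping (illustrative, not the 3GPP table)."""
    return 0.37 * cqi


def best_cqi_pick(cqis: Sequence[int]) -> int:
    """Index of the UE with the highest CQI; ties go to the lowest index."""
    return max(range(len(cqis)), key=lambda u: cqis[u])


def simulate(actual: List[List[int]], estimate: List[List[int]]) -> float:
    """Mean per-slot spectral efficiency of a best-CQI scheduler.

    actual[t][u]   : true CQI of UE u in slot t
    estimate[t][u] : CQI the scheduler believes UE u has in slot t
    """
    total = 0.0
    for true_cqis, est_cqis in zip(actual, estimate):
        ue = best_cqi_pick(est_cqis)
        chosen = est_cqis[ue]  # link adaptation uses the believed CQI
        # Simplified link model (assumption): the transmission succeeds only if
        # the chosen CQI does not exceed what the channel actually supports.
        if chosen <= true_cqis[ue]:
            total += spectral_efficiency(chosen)
    return total / len(actual)


# Hypothetical 2-UE trace with rapidly alternating channel quality (made-up values).
actual = [[10, 4], [3, 12], [11, 5], [2, 13]]
reactive = [actual[0]] + actual[:-1]  # scheduler acts on last slot's report
proactive = actual                    # perfect forecast of this slot's CQI
print(simulate(actual, reactive), simulate(actual, proactive))
```

On this deliberately adversarial trace the stale reports steer the reactive scheduler to the wrong UE and an overly aggressive rate in most slots, while the forecast-driven scheduler always serves the UE that is currently strongest. Real gains depend on the channel dynamics and on how accurate the forecasts are.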

PROMPT: Prediction of Channel Metrics for Proactive Optimization in Cellular Networks
